The uncanny valley sits at the crossroads of fascination and dread — that eerie moment when a robot, doll, or CGI character looks almost human, but not quite. Our brains recoil. Eyes that blink a little too slowly, smiles that linger too long, skin that’s almost alive — all create an instinctive unease that’s part curiosity, part horror.

“The uncanny valley isn’t about machines failing to look real,” explains Dr. Masahiro Mori, the Japanese roboticist who coined the term in 1970. “It’s about what happens when they succeed too well.”

The Origin Of The Uncanny Valley

Mori’s original hypothesis described how human comfort increases as robots appear more lifelike — until a sudden drop in emotional response occurs when the likeness becomes too close. That dip, the “valley,” is the zone of unease where near-human forms evoke discomfort or fear.

In psychology, this response is linked to cognitive dissonance — the brain struggles to categorize what it sees. Something looks human, but the micro-details (eye movement, breath rhythm, emotional timing) betray artificiality.

| Stage | Example | Emotional Reaction |
|---|---|---|
| Mechanical Robot | Industrial robot arm | Neutral curiosity |
| Humanoid Robot | ASIMO, Atlas | Warm interest |
| Near-Human Robot/CGI | Androids, deepfake faces | Disturbance, rejection |
| Fully Human | Actual person | Comfort restored |
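The shape of the curve can be made concrete with a toy model. The sketch below is purely illustrative: Mori drew a qualitative graph, not a formula, so the function `affinity`, the Gaussian dip, and every number in it (the dip's position, width, and depth, and the likeness value assigned to each stage) are assumptions chosen only to reproduce the rise, drop, and recovery described above.

```python
import math

# Hypothetical parameters: not taken from Mori or from any study.
DIP_CENTER = 0.85   # likeness level where unease peaks (assumed)
DIP_WIDTH = 0.05    # how narrow the valley is (assumed)
DIP_DEPTH = 1.2     # how far comfort falls at the bottom (assumed)


def affinity(likeness: float) -> float:
    """Toy comfort score for a human-likeness value in [0, 1].

    A steadily rising trend minus a narrow Gaussian dip placed
    just short of full human likeness.
    """
    if not 0.0 <= likeness <= 1.0:
        raise ValueError("likeness must be between 0 and 1")
    trend = likeness  # comfort grows as the form becomes more lifelike
    dip = DIP_DEPTH * math.exp(
        -((likeness - DIP_CENTER) ** 2) / (2 * DIP_WIDTH ** 2)
    )
    return trend - dip


# Assumed likeness values for the four stages in the table.
STAGES = {
    "Mechanical Robot": 0.10,
    "Humanoid Robot": 0.50,
    "Near-Human Robot/CGI": 0.85,
    "Fully Human": 1.00,
}

for name, likeness in STAGES.items():
    print(f"{name:22s} likeness={likeness:.2f} affinity={affinity(likeness):+.2f}")
```

With these assumed values, the near-human stage is the only one that scores below zero, and the score recovers once likeness reaches 1.0. That pattern is the "valley" in miniature.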

The Psychology Of Discomfort

Why do almost-human faces unsettle us so profoundly? Neuroscience points to two conflicting brain systems:

The Fusiform Face Area (FFA) — recognizes human faces.

The Amygdala — detects threat and emotional irregularity.

When the FFA identifies a face but the amygdala detects “something wrong,” an internal alarm triggers. This mismatch produces fear and revulsion, similar to reactions toward disease or death cues.

“The uncanny valley is evolution’s error detection system,” says Dr. Karl MacDorman, cognitive scientist at Indiana University. “It warns us that something looks alive but isn’t.”

Evolutionary Roots Of The Uncanny

Several theories propose that the uncanny valley evolved as a survival mechanism. Early humans learned to detect subtle signs of illness, death, or predation. Faces that appeared “off” — pale skin, rigid posture, lifeless eyes — signaled danger.

Modern robotics and CGI inadvertently mimic these same cues.

A hyperrealistic android’s stillness may resemble a corpse; overly symmetrical features may suggest disease or mutation rather than beauty.

| Evolutionary Cue | Modern Trigger | Instinctive Response |
|---|---|---|
| Corpse-like stillness | Robots lacking micro-movements | Disgust |
| Asymmetrical expression | Poor facial animation | Anxiety |
| Lifeless gaze | Camera misalignment in CGI | Fear |
| Over-smooth skin | Unreal textures | Suspicion |

The Role Of Empathy And Identity

Humans are wired for emotional mirroring — our mirror neurons activate when observing real expressions. But when those expressions are mechanically delayed or incomplete, empathy fails.

That failure creates psychological distance: the entity seems familiar yet unreachable.

This duality — human yet not human — challenges our sense of identity.

“It’s a mirror that reflects humanity minus the soul,” said David Levy, AI researcher and author of Love and Sex with Robots.

How Technology Deepened The Valley

Recent advances in CGI, AI avatars, and robotics have brought us closer to the valley’s edge than ever.

Deepfakes blur the boundary between authenticity and simulation.

Virtual influencers like Lil Miquela challenge emotional authenticity online.

AI voice synthesis replicates empathy without understanding it.

The result is not fear of machines — it’s fear of emotional deception. When we can’t tell real from artificial, trust becomes fragile.

| Technology | Psychological Effect |
|---|---|
| Deepfake videos | Loss of reality anchors |
| Humanoid robots | Threat to human uniqueness |
| AI chatbots with voices | Emotional confusion |
| CGI actors in film | Discomfort despite realism |

Case Study: Cinema’s Creepiest Experiment

When The Polar Express (2004) debuted, audiences marveled at its realism but felt uneasy. Critics described the characters’ eyes as “lifeless” and “zombie-like.”

Other films drew similar reactions, including Final Fantasy: The Spirits Within (2001), Beowulf (2007), and even Rogue One (2016) with its digital Princess Leia.

Productions such as Avatar and The Last of Us Part II fared better: their animators avoided the valley by emphasizing human imperfections such as asymmetry, irregular blinking, and micro-sweats.

“Perfection is not the goal,” said Joe Letteri, senior visual effects supervisor at Weta Digital. “Believability comes from flaws.”

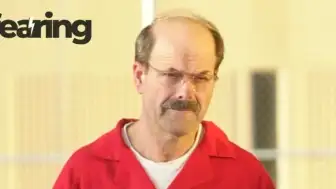

When Machines Become Too Human

Social robots like Sophia and Ameca demonstrate that personality can heighten unease. Their speech rhythms and humor feel almost natural — until milliseconds of awkward delay break the illusion.

In lab studies, participants described such robots as “unsettling,” “soulless,” or “possessed.”

Functional MRI (fMRI) scans showed amygdala activation similar to that seen when participants view images of corpses.

“Our brains treat them like living contradictions,” explains Dr. Ayse Saygin, UC San Diego neuroscientist. “They move like humans but lack biological cues, which confuses our threat systems.”

Escaping The Valley

Designers now aim to climb out of the valley by balancing realism and stylization.

This strategy — known as functional anthropomorphism — gives machines human traits without triggering full empathy mismatch.

Examples include:

Pixar characters: expressive but cartoonish faces.

Boston Dynamics robots: clearly mechanical, yet relatable.

Meta’s avatars: simplified to maintain emotional clarity.

| Approach | Outcome |
|---|---|
| Hyperreal human replication | Triggers unease |
| Stylized exaggeration | Encourages comfort |
| Transparent artificiality | Builds trust |

The key insight: people trust robots more when they don’t pretend to be people.

The Future Fear Of AI Faces

As generative AI produces increasingly realistic digital humans, the uncanny valley becomes less about visual imperfection and more about moral ambiguity.

When a voice sounds real but the emotions are synthetic, we confront a deeper fear — not of machines, but of manipulation.

“The next uncanny valley is psychological,” warns MIT’s Sherry Turkle. “It’s the point where imitation becomes indistinguishable from empathy.”

FAQ

Q1: Can we ever eliminate the uncanny valley?

A1: Possibly, but it may require redesigning empathy itself — making machines emotionally legible rather than human-like.

Q2: Why do some people enjoy uncanny imagery?

A2: Artists and horror fans often experience aesthetic thrill — safe exposure to discomfort enhances curiosity.

Q3: Are AI companions part of the uncanny valley?

A3: Yes, especially those using realistic voices or faces without genuine emotion.

Q4: Does culture affect uncanny perception?

A4: Some studies suggest that Japanese participants tolerate humanoid robots better, possibly because of long cultural familiarity with them.

Q5: Will future generations stop feeling this fear?

A5: Possibly. As exposure increases, desensitization may flatten the valley — though new fears may arise with AI autonomy.
